Resist the urge to be impressed

Research from the early 2000s makes current AI look less cool

I’m taking a computer vision class this semester, and my favorite takeaways so far are the incredible research that was already being done in the early 2000s. After the deep learning explosion of the 2010s, it seems like these have been largely forgotten.

The improvement of image and video AI in the past couple of years has been impressive. But given their abilities now, we’ve had a good understanding of necessary underlying computation, which is based on signal processing and visual perception, for a very long time. Here are some examples that are still impressive today, especially considering the orders of magnitude less data and compute.

Video Textures (2000)

They take short videos and generate potentially infinite “video textures” (think Harry Potter moving portraits), using existing frames of the original video. The algorithm is simple: create a similarity matrix between all frames of a video, generate a probabilities for each transition based on similarity, and sample the next frame from the transition distribution to generate continuous videos. There are some extra tricks, but that’s its essence. In the video below, the red lines at the bottom indicate transitions to non-adjacent frames. Perceptually, it’s impossible to tell there are loops.

In the next video, they take 5 minutes of a fish swimming around in a tank, remove the background, extract the center position of the fish at each frame, and add an interactive component: the position of the user’s cursor, which contributes to selecting the next frame. Again, no new frames are being generated. They are basically creating a more complex graph between video frames and defining a good transition function.

The examples shown are all quite simple compared to what we see today, but scaling this up with some tricks would still result in some really cheap gaming or video editing tools (maybe this has already been done).

Project website.

Image Analogies (2001)

Style transfer got big in the mid 2010s with deep learning, but it turns out we already knew how to do this pretty well in 2001, without a ton of compute! Below is an example from the paper, taking images and making them watercolor-y.

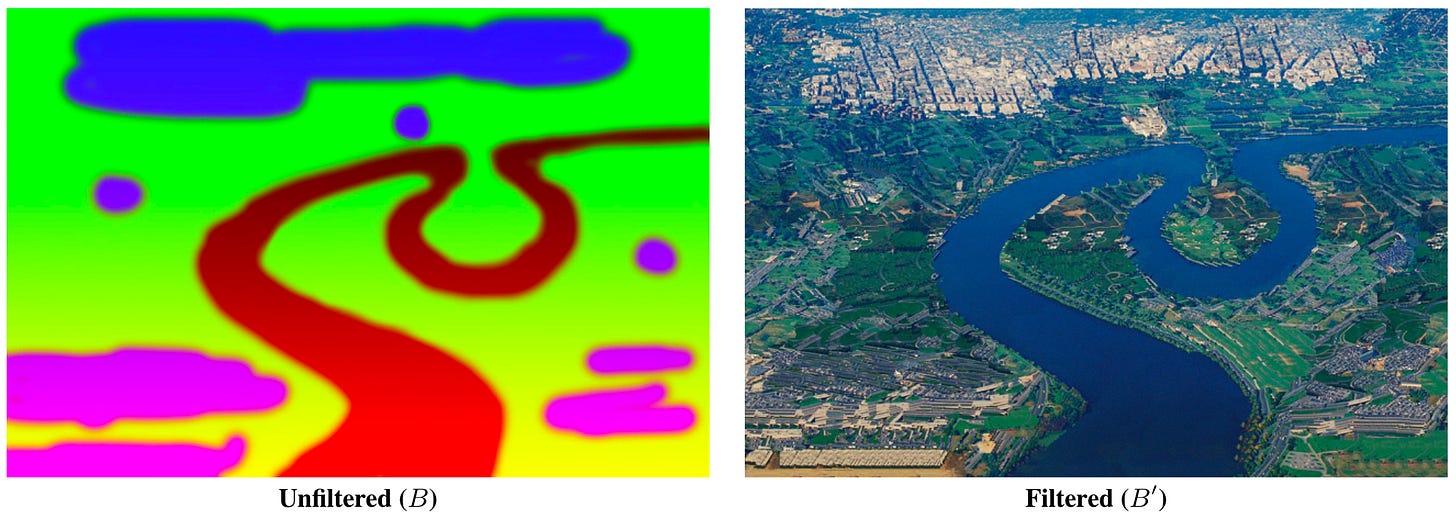

The method takes pairs of images A and A’, where A is the original and A’ is the transformed image. Given another image B that is in the same style as A, they generate B’. The algorithm is roughly as follows: apply a set of filters (classical signal processing) and construct feature vectors (information about color, luminance, etc.) for A, A’, B. To create B’, they look at the context around each pixel in B (considering all features, local neighborhood, and lower resolution filter outputs), and find the pixel in A that best matches everything. The pixel in B’ is then set to be corresponding pixel in A’. Remarkably, they only need one pair of images, not a huge training set, to do this!

To me, the coolest example is “paint-by-texture”:

More examples in the paper here.

Videorealistic Facial Animation (2002)

Deepfake lip-syncs (new mouth and maybe other head movements generated to new audio) have gotten scarily good, but there were already impressive results in 2002! Can you guess whether the video below was an original or generated from the model?

At the core of the method are two models: Model 1 morphs between seen frames to generate new frames, and Model 2 maps from audio to parameters representing mouth shape and texture. The two models are first trained on 15 minutes of a woman speaking pre-selected sentences. Then, given new audio, Model 2 outputs parameters that are fed into Model 1, which then generates new video that matches the given audio. Model 1 consists of optical flow, PCA, clustering, and graph traversal, and Model 2 is an optimization procedure. Although more complicated than the previous algorithms in this post, each step has clear theoretical foundations.

Deepfakes now are much more advanced (e.g. can capture more than just a still face and head), and I’m guessing require less source video for a good deepfake of a single person. But the improvements are negligible compared to the massive increase in data and energy required.

Here’s a video generated using a Teresa Teng song. By the way, the first video was generated by the algorithm.

Project website.

Gradient Domain Painting (2008)

The main contribution of this paper is the integration of existing image editing methods into a realtime tool. The methods include cloning and blending, and operate in the gradient domain of an image (classical image processing). Really cool demo video:

Project website.

All these methods were very cleverly designed, given the computational and data constraints of the time. They required deep understanding of signal processing, visual perception, and algorithm design, and the constraints actually led to more creative methods. In contrast, the way we perform these tasks today are with brute force, essentially throwing data at a gargantuan function approximator. I would argue that vast majority of the progress we’ve made is due to amazing feats of hardware and software engineering, not to fundamentally better understanding of the problems.

Emily Bender, a linguistics professor at the University of Washington, tells us to resist the urge to be impressed by AI (focusing on language), which I highly recommend reading. Indeed, we should be extremely skeptical whenever a tech company, or an AI expert with financial ties to a tech company, takes any strong stance on AI. They have a clear conflict of interest. Understanding where we were before the hype, and where we actually are now, is critical.

Setting aside concerns about massive computational resources for now, sure, current models are bigger, better, and faster versions of what I talked about in this post. But this all begs the question: what are the new models actually good for? In the next post, I will give in to the urge to rant about generative AI.